Splunk Data Migration: Migrating from Single Instance to Indexer Cluster

A single instance deployment is often a good approach for testing and POCs.

It might even work for smaller environments as it handles all aspects of Splunk including indexing and search.

However, most production deployments require a highly scalable analytics infrastructure that a single-instance Splunk deployment cannot provide.

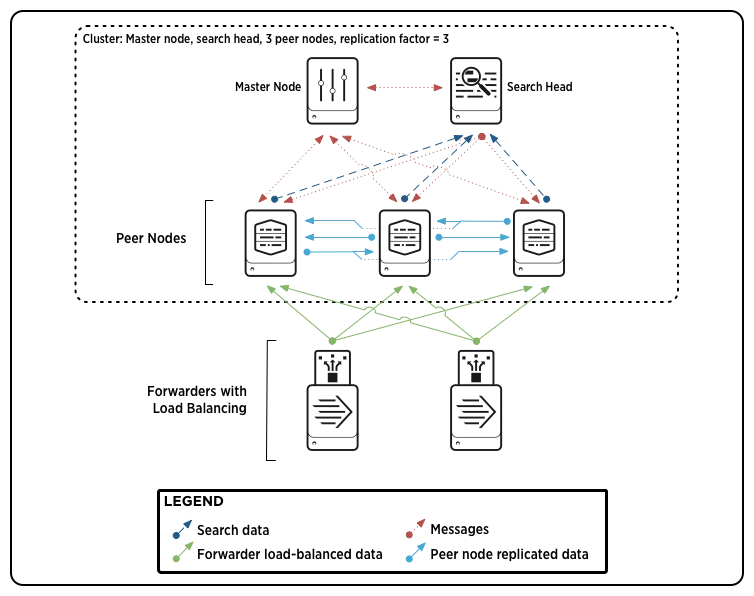

For example, in a cluster one or more instances can index real-time data while other instances handle search and cluster management.

Many customers start their Splunk POC as a standalone (single-instance) deployment. Once the POC is done, scaling out from that standalone instance is crucial to becoming production-ready. One of the major challenges is migrating the data.

There is no standard procedure for data migration. The cluster needs to create multiple searchable and non-searchable copies of the buckets to fulfill its replication factor (RF) and search factor (SF), which can take a large amount of time and processing depending on the size of the data.

Buckets available on the indexer prior to its being added to the cluster are called “standalone” buckets. These buckets do not get replicated because the cluster does not replicate any buckets that are already on the indexer. However, searches will still occur across those buckets and will be combined with the search results from the cluster’s replicated buckets.

To migrate data, it is not advisable to add the ‘standalone’ instance to the cluster. Instead, create a new indexer cluster and copy the data and the configs to it. That way the standalone instance remains intact as a backup in case the data migration process fails.

A few things to keep in mind before migrating from a standalone instance to a cluster environment:

Naming convention for Standalone bucket: db_latesttime_earliesttime_idnum

Naming convention for Clustered buckets: db_latesttime_earliesttime_idnum_GUID

Where,

latesttime is the timestamp of the latest event in the bucket

earliesttime is the timestamp of the earliest event in the bucket

idnum is the unique bucket ID number

GUID is the GUID of the indexer where bucket resides/originated. You can find this in $SPLUNK_HOME/etc/instance.cfg
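The two naming conventions can be illustrated with a small parser. This is a minimal sketch, not a Splunk API: the names `parse_bucket_name` and `clustered_name` are hypothetical, and the pattern assumes that the timestamps and ID are plain integers and that the GUID is a standard 36-character hyphenated hex string.

```python
import re
from typing import NamedTuple, Optional

# Directory-name pattern taken from the conventions above:
#   standalone: db_<latesttime>_<earliesttime>_<idnum>
#   clustered:  db_<latesttime>_<earliesttime>_<idnum>_<GUID>
# Assumption: the GUID is a 36-character hyphenated hex string.
BUCKET_RE = re.compile(
    r"^db_(?P<latest>\d+)_(?P<earliest>\d+)_(?P<idnum>\d+)"
    r"(?:_(?P<guid>[0-9A-Fa-f-]{36}))?$"
)


class Bucket(NamedTuple):
    latest: int           # timestamp of the latest event
    earliest: int         # timestamp of the earliest event
    idnum: int            # bucket ID number
    guid: Optional[str]   # None for a standalone bucket


def parse_bucket_name(name: str) -> Optional[Bucket]:
    """Return the parsed parts, or None if the name is not a db_ bucket."""
    m = BUCKET_RE.match(name)
    if m is None:
        return None
    return Bucket(int(m["latest"]), int(m["earliest"]), int(m["idnum"]), m["guid"])


def clustered_name(name: str, guid: str) -> str:
    """Append the GUID to a standalone bucket name; leave clustered names alone."""
    bucket = parse_bucket_name(name)
    if bucket is None:
        raise ValueError(f"not a bucket directory name: {name!r}")
    if bucket.guid is not None:
        return name
    return f"{name}_{guid}"
```

A bucket whose parsed `guid` is `None` is a standalone bucket in the sense described earlier.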

Before migrating data, validate the following:

On Standalone Instance:

Ensure data ingestion has been stopped. With ingestion stopped, no new data arrives during the migration and no buckets are lost in the process.

Roll all the hot buckets to warm buckets. You can just restart Splunk and the buckets will be rolled.
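After the restart, it can be worth confirming that no hot buckets remain before copying anything. The sketch below assumes that hot bucket directories in an index's db path have names starting with `hot_` (for example `hot_v1_0`); the function name and the path you pass in are hypothetical and should be adapted to your environment.

```python
from pathlib import Path
from typing import List


def find_hot_buckets(index_db_path: str) -> List[str]:
    """List directories under the index db path that still look like hot buckets.

    Assumption: hot bucket directory names start with "hot_".
    An empty result suggests every bucket has rolled to warm.
    """
    root = Path(index_db_path)
    return sorted(
        entry.name
        for entry in root.iterdir()
        if entry.is_dir() and entry.name.startswith("hot_")
    )
```

If the returned list is not empty, the hot buckets have not all rolled yet and the copy should wait.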

On Clustered instance:

Ensure ingestion is happening on clustered indexers with no errors (as soon as it is stopped on standalone instance)

Make sure that the configs (files) from the standalone instance have been applied to all clustered indexers, especially the index definitions. Push the configs using the master node (do not use any config management tools).
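One way to check the index definitions is to compare the stanza names in the standalone indexes.conf with those pushed to the cluster. The following is a rough sketch under simple assumptions: it only looks at stanza headers such as `[main]`, ignores the settings inside each stanza, and treats every stanza (including `[default]`) the same way. The function names are hypothetical.

```python
import re
from typing import Set

# A stanza header is a line of the form [name]; comment lines start with '#'
# and therefore never match.
STANZA_RE = re.compile(r"^\s*\[([^\]]+)\]\s*$")


def index_stanzas(conf_text: str) -> Set[str]:
    """Collect the stanza names found in the text of an indexes.conf file."""
    names = set()
    for line in conf_text.splitlines():
        m = STANZA_RE.match(line)
        if m:
            names.add(m.group(1).strip())
    return names


def missing_indexes(standalone_conf: str, cluster_conf: str) -> Set[str]:
    """Stanzas defined on the standalone instance but absent from the cluster config."""
    return index_stanzas(standalone_conf) - index_stanzas(cluster_conf)
```

Any name this returns is an index whose buckets would have no matching definition on the clustered indexers.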

Steps to migrate data from a standalone instance to cluster:

Copy all the data from standalone instance to clustered instances using any of the following approaches:

Use SCP or RSYNC to copy data

Upload the data to Amazon S3 buckets and then use the AWS CLI to download it onto your new instances. (It is not recommended to SCP or download the data directly into the DB path. Instead, place the data in a tmp directory and rename the buckets as you move them into the DB path.)

Put all buckets on a single indexer and rebalance the cluster to distribute the buckets evenly, OR distribute the buckets evenly by hand in the first place and then rename them. (Both approaches work the same way.)

Start Maintenance Mode in Cluster Master

Stop Splunk on one of the indexers

Rename the buckets to append the GUID (db_latesttime_earliesttime_idnum_GUID). Ensure all of the IDs are unique: if even one ID is duplicated, the indexer won’t start.

Start Splunk on the indexer

Rebalance the cluster to distribute primaries if all buckets are in a single indexer
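The rename step above can be sketched as a two-phase script: first build a rename plan and check for ID collisions, then move the buckets from the tmp (staging) directory into the DB path. This is an illustrative sketch, not a supported Splunk tool. The function names are hypothetical, the GUID is assumed to be a 36-character hyphenated hex string, and "unique" is interpreted here as no two buckets sharing the same ID number and GUID within one index directory. It should only be run while Splunk is stopped on that indexer.

```python
import os
import re
import shutil
from typing import Iterable, List, Tuple

BUCKET_RE = re.compile(
    r"^db_\d+_\d+_(?P<idnum>\d+)(?:_(?P<guid>[0-9A-Fa-f-]{36}))?$"
)


def plan_renames(
    staged: Iterable[str], existing: Iterable[str], guid: str
) -> List[Tuple[str, str]]:
    """Map each staged standalone bucket name to its clustered name.

    Raises ValueError if a resulting (idnum, GUID) pair is already taken,
    either by a bucket in the DB path or by another staged bucket.
    """
    taken = set()
    for name in existing:
        m = BUCKET_RE.match(name)
        if m and m["guid"]:
            taken.add((int(m["idnum"]), m["guid"].upper()))

    plan = []
    for name in sorted(staged):
        m = BUCKET_RE.match(name)
        if m is None or m["guid"]:
            continue  # skip non-bucket entries and names that already carry a GUID
        key = (int(m["idnum"]), guid.upper())
        if key in taken:
            raise ValueError(f"bucket ID {key[0]} is already in use for GUID {guid}")
        taken.add(key)
        plan.append((name, f"{name}_{guid}"))
    return plan


def apply_plan(plan: List[Tuple[str, str]], staging_dir: str, db_dir: str) -> None:
    """Move each staged bucket into the DB path under its new name."""
    for old, new in plan:
        shutil.move(os.path.join(staging_dir, old), os.path.join(db_dir, new))
```

Separating the plan from the move means a collision is reported before any bucket has been touched, which keeps the staging directory intact if the check fails.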

Using this approach, we can migrate data from a standalone instance to multiple clustered instances. The task is sensitive: ingestion on the standalone instance is stopped during the process, and a simple mistake can affect Splunk. Because this process is not fully supported by Splunk, it is recommended to contact Splunk-certified Professional Services Consultants to complete the data migration.


Author

DHRUV PATEL

Dhruv Patel is a Senior Software Engineer at Crest Data. He has 3+ years of experience in technologies like Splunk, Docker, AWS, etc. He is a Splunk Certified Architect and AWS Certified Solutions Architect. He currently works as a Site Reliability Engineer leading a team to manage 24×7 Splunk operations. He holds a Bachelor’s degree in Computer Engineering from Gujarat Technological University, India.