How to Onboard Pub/Sub Data from Google Cloud Using Splunk Add-on

As Splunk admins, we receive many onboarding requests.

These can come from various data sources, including API data and cloud data. Many customers these days want to see Google Cloud data and metrics in Splunk, so it is worth understanding the process.

The Splunk Add-on for Google Cloud Platform allows a Splunk administrator to collect Google Cloud Platform events, logs, and performance metrics data using Google Cloud Platform APIs. The process can be broken into the steps below so that it is easy for all team members to follow. There are two phases to onboarding the logs:

Setting up the add-on on a heavy forwarder or any parsing layer (make sure the instance has internet access so that it can pull the data).

Setting up a Google Cloud account with valid keys (Editor-based permission preferred). We also need to make sure that a valid service account is associated with that account and subscription so that the add-on can authenticate.

Here we will look at how to onboard Google Cloud Pub/Sub data to Splunk using the add-on.

Splunk platform requirements

Because this add-on runs on the Splunk platform, all of the system requirements apply to the Splunk software used by the customer.

A. Setting up the add-on on a heavy forwarder or indexer for creating inputs

This add-on requires heavy forwarders to perform data collection via modular inputs and to perform the setup and authentication with Google Cloud Platform.

Download the add-on from Splunkbase at https://splunkbase.splunk.com/app/3088/ and make sure that the downloaded version is compatible with your current version of Splunk Enterprise.

Extract the .tar file from the downloaded version of add-on.

Before installing this add-on to a cluster, make the following changes to the add-on package:

1. Remove the `eventgen.conf` files and all files in the samples folder.

2. Remove the `inputs.conf` file, as it might send data generated by the eventgen app to the platform.

Place the package under the `$SPLUNK_HOME/etc/-apps/` folder. (Please note that this may be specific to your requirements.)
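The clean-up steps above can be scripted. The following is a minimal sketch, assuming the add-on has already been extracted to a local directory; the function name `strip_sample_content` is hypothetical, and for simplicity it removes each samples folder as a whole rather than only the files inside it. Whether every `inputs.conf` should go depends on your requirements, so review the returned list before deploying.

```python
import shutil
from pathlib import Path


def strip_sample_content(addon_dir: Path) -> list[str]:
    """Remove eventgen.conf files, inputs.conf files, and samples folders.

    Returns the removed paths relative to addon_dir, sorted for readability.
    """
    # Collect the targets first so the tree is not modified while it is walked.
    targets = [
        path
        for path in addon_dir.rglob("*")
        if path.name in ("eventgen.conf", "inputs.conf")
        or (path.is_dir() and path.name == "samples")
    ]
    removed = []
    for path in targets:
        if not path.exists():
            continue  # already deleted together with a parent samples folder
        removed.append(path.relative_to(addon_dir).as_posix())
        if path.is_dir():
            shutil.rmtree(path)
        else:
            path.unlink()
    return sorted(removed)
```

Calling `strip_sample_content(Path("Splunk_TA_google-cloudplatform"))` on the extracted package returns the list of paths it deleted, which can be kept as a record of what was changed before the package was pushed to the cluster.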

Set up the add-on using configuration files

Configure credentials of the Splunk Add-on for Google Cloud Platform by completing the following steps:

Create a file named `google_credentials.conf` under `$SPLUNK_HOME/etc/deployment-apps/Splunk_TA_google-cloudplatform/local`.

Create a stanza in `google_credentials.conf` using the following template:

    [Test_Blog]
    google_credentials = <This is a Key provided by user>
    # Google service account key that is in JSON format and can be downloaded from the Google admin console
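To avoid copy-paste mistakes when filling in the credentials template, the stanza can be generated from the downloaded key. This is a sketch only: `render_credentials_stanza` is a hypothetical helper, and collapsing the key onto a single line is an assumption made here to keep the `.conf` value simple, not a documented requirement of the add-on.

```python
import json


def render_credentials_stanza(stanza_name: str, key_json_text: str) -> str:
    """Return a google_credentials.conf stanza with the key on a single line."""
    # json.loads raises ValueError if the text is not valid JSON.
    key = json.loads(key_json_text)
    # Re-serialise compactly so the whole key fits on one .conf line.
    one_line_key = json.dumps(key, separators=(",", ":"))
    return f"[{stanza_name}]\ngoogle_credentials = {one_line_key}\n"
```

The returned text can be written to `google_credentials.conf` in the add-on's `local` folder. Because the key is parsed first, a malformed key fails here rather than later during authentication.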

We can configure proxy as well, however it is not needed in our case.

Configure Cloud Pub/Sub inputs using the configuration file

Follow these steps to configure Cloud Pub/Sub inputs.

Create a file named `google_pubsub_inputs.conf` under `$SPLUNK_HOME/etc/deployment-apps/Splunk_TA_google-cloudplatform/local`.

Create stanzas using the following template.

    [Test_Blog]
    google_credentials_name = <value>
    # Stanza name defined in google_credentials.conf (make sure it matches the credential name)
    google_project = <value>
    # This will be given by the user. The project is mandatory for creating any input; without it the modular input fails with an ExecProcessor error.
    google_subscriptions = <value>
    # This will be given by the user. The subscription must be associated with the project.
    index = <google_cloud_staging>
    # This is a separate index for indexing Google Cloud data.

After `google_credentials.conf` is created, another file, `passwords.conf`, will be created that holds the credential information in encrypted form.
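Since a missing project or subscription only surfaces later as a modular input error, it can help to check the values before writing the input stanza. The sketch below uses the four setting names from the template above; the helper `render_pubsub_input` and its up-front validation are assumptions for illustration, not part of the add-on.

```python
def render_pubsub_input(name, credentials_name, project, subscriptions, index):
    """Return a google_pubsub_inputs.conf stanza, rejecting empty settings."""
    settings = {
        "google_credentials_name": credentials_name,
        "google_project": project,
        "google_subscriptions": subscriptions,
        "index": index,
    }
    # Fail early instead of letting the modular input fail at run time.
    missing = [key for key, value in settings.items() if not value]
    if missing:
        raise ValueError("missing settings: " + ", ".join(missing))
    lines = [f"[{name}]"] + [f"{key} = {value}" for key, value in settings.items()]
    return "\n".join(lines) + "\n"
```

For example, `render_pubsub_input("Test_Blog", "Test_Blog", "my-project", "Demo", "google_cloud_staging")` produces a stanza ready to be saved in the `local` folder; the project ID `my-project` is a placeholder.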

B. Setting up a Google Cloud account with valid keys (in this case the user will do this; I am including it here for learning purposes)

Now it is time to set up a Google Cloud account with a service account and valid keys associated with it.

Log in to your Google Cloud console and navigate to the project where you want to configure the Pub/Sub input.

Navigate to Service Accounts under the IAM & Admin section to create a key. Here my project name is My Project 1.

Click on “CREATE SERVICE ACCOUNT” and enter service account name.

Select the Pub/Sub Editor role and then click on create keys. Make sure to select the JSON format when creating the key. It will be used later in the `google_credentials.conf` file.

Once the key is downloaded, copy and paste its content under the stanza, like this (the key is truncated here):

    [Test]
    google_credentials = {"type": "service_account","project_id": "Test","private_key_id": "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX", ...}

Now we will move on to publishing a message to the topic associated with the subscription so that we can see data in Splunk.
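Before publishing, it may be worth sanity-checking the pasted key, since keys copied through a word processor or web page often pick up curly quotes that break the JSON. The following is a minimal sketch: `check_service_account_key` is a hypothetical helper, and the field list is an assumption based on the fields a Google service account JSON key normally contains.

```python
import json

# Fields a service account JSON key is expected to carry (assumed minimum set).
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def check_service_account_key(key_json_text: str) -> list[str]:
    """Return a list of problems; an empty list means the key looks usable."""
    try:
        key = json.loads(key_json_text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level JSON value is not an object"]
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']}")
    return problems
```

An empty result only means the key is well formed; it does not prove that the service account has the Pub/Sub role or that the key is still active.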

Here I have created a subscription named Demo, from which the data will be pulled into Splunk.

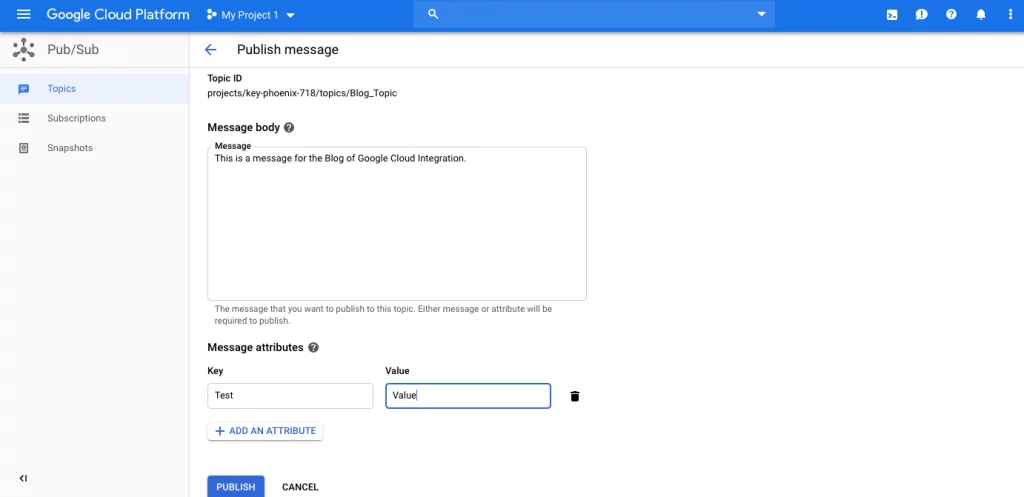

Now publish a message for your subscription

Blog_Subscription
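The console is the simplest way to publish a test message, but the same step can be done through the Pub/Sub REST API, where messages are published to the topic and then delivered to its subscriptions. The sketch below only builds the request URL and JSON body, with the message data base64-encoded as the API expects. The helper name and the project and topic values are hypothetical, and sending the request still requires an OAuth access token, which is not shown.

```python
import base64
import json


def build_publish_request(project: str, topic: str, message: str) -> tuple[str, str]:
    """Return (url, json_body) for a Pub/Sub REST publish call."""
    url = f"https://pubsub.googleapis.com/v1/projects/{project}/topics/{topic}:publish"
    # Pub/Sub expects the message payload as a base64-encoded string.
    data = base64.b64encode(message.encode("utf-8")).decode("ascii")
    body = json.dumps({"messages": [{"data": data}]})
    return url, body
```

The resulting URL and body can be sent as a POST with any HTTP client, using an `Authorization: Bearer <token>` header. Once the add-on pulls the message from the subscription, the decoded payload should appear in the configured index.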

We can see the posted data in Splunk for the same Blog_Subscription. We can also see that the sourcetype is `google:gcp:pubsub:message`.

Using the above method, the team can easily onboard Pub/Sub inputs through the Google Cloud add-on. Please reach out to us if you have any questions.

Happy Splunking !!


Author

RISHABH GUPTA

Rishabh is currently working as Splunk and Security Professional Services Consultant at Crest Data. Rishabh has been consulting with Fortune 500 and global enterprise customers and has been an active member of Cyber Security and Infrastructure Reliability communities for 6+ years. Rishabh is an accredited consultant for Splunk Core, Splunk Enterprise Security, Splunk UBA for SIEM and Phantom for SOAR based applications. He has been a frequent speaker at DefCon and other Cyber Security Conferences.